To prepare for the 2020 presidential election, a Facebook researcher designed a study to investigate whether the platform’s automated recommendations could expose users to misinformation and polarization. The experiment showed how quickly the social media site suggested extreme, misleading content from both ends of the political spectrum.

Two test profiles created

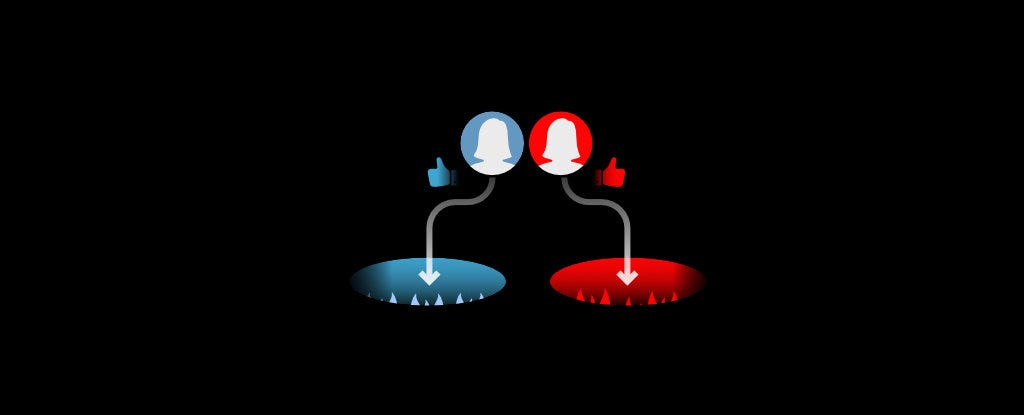

The researcher created user profiles for two 41-year-old North Carolina moms. They had similar interests, except one was conservative and the other was liberal. The researcher then watched to see what surfaced in the accounts' News Feeds and recommended groups and pages.

Reports describing the experiments are among hundreds of documents disclosed to the Securities and Exchange Commission and provided to Congress in redacted form by attorneys for Frances Haugen[1], a former Facebook employee. The redacted versions were obtained by a consortium of 17 news organizations, including USA TODAY.

Facebook Papers: Two experimental Facebook accounts show how the company helped divide America[2]

Researcher chooses mainstream media, politicians

Week one

Political recommendations soon started to appear in both feeds. Some suggestions for the conservative user, described in a report called "Carol's Journey to QAnon," already contained misinformation and partisan content.

Within two days, recommendations for Carol's account began to lean toward conspiracy content, the researcher found. In less than a week, Facebook's automated recommendations included a QAnon page; QAnon is a wide-ranging extremist ideology founded on the allegation that Democrats and celebrities are involved in a satanic, pedophile cult. The researcher did not follow that page.

Week two

By the second week of the experiment, both accounts were full of political content, with misleading posts coming from recommended pages and groups. Carol, the conservative user, was shown more extreme and graphic content, the researcher found, and she received a push notification for a false story posted in a group.

The liberal account, described in a report called "Karen & the Echo Chamber of Reshares," chiefly featured posts of mainstream news articles. But that user's feed also contained false and misleading posts from the political meme pages and groups the researcher followed.

Week three

Polarizing political content was ever-present in both users' feeds as the Facebook experiment continued. Several misleading memes appeared in the feed for Karen, the liberal user, in response to mass shootings in El Paso, Texas, and Dayton, Ohio.

Carol, the conservative user, was fed conspiracy-laden content, including some sort of watch party in a group called "We Love Barron Trump," the researcher found. Her feed also contained a video promoting the Proud Boys, a hate group banned from Facebook.

The researcher concluded the conservative user's feed "devolved to a quite troubling, polarizing state in an extremely short amount of time." The feed for the liberal user was similar, though not as severe, the researcher wrote. In both cases, the driver was Facebook's automated recommendations.

Among the researcher's recommendations: retooling Facebook's Recommended Pages module and excluding groups with known conspiracy references and those tagged for spam behavior.

A Facebook spokesman said the company adopted some of the recommendations this year, such as eliminating the "like" button in the News Feed for pages that had violated the company's rules but had not yet been removed from the platform.

Contributing: Jessica Guynn

References

[1] Frances Haugen (www.usatoday.com)

[2] Two experimental Facebook accounts show how the company helped divide America (www.usatoday.com)

from GANNETT Syndication Service https://ift.tt/3w0pOAQ
